Authors: Dimitrios V. Papadopoulos and Ioannis Gkourtzounis

Introduction

In this article we share our experience of integrating Docker with Jenkins, an approach that is especially useful in the Quality Assurance sector. We walk through the concepts behind this integration so that testers can see these processes from a new perspective. This way, they can spend less time creating new Jenkins instances and become more efficient by automating these procedures.

The Tools

First, we present the tools used in this project. They are well known in the IT sector for their functionality and flexibility. However, some crucial configuration is needed to get the expected outcome.

Jenkins: Jenkins is an open-source automation server written mainly in Java. It automates software development processes through Continuous Integration and Continuous Delivery (CI/CD).

Docker: Docker handles the “containerization” of an application: the dependencies, libraries and other files it needs are placed in a single package. Docker reads the configuration of such a package and instantiates it as a container. A container can expose its services on a specific port of the host operating system and shares the host's kernel. This makes containers much more lightweight than virtual machines (VMs).

Cucumber: Cucumber is a test automation framework that helps testing teams apply Behavior-Driven Development (BDD). BDD is a testing approach in which scenarios are written in plain human language and then mapped to test code. This keeps the tests readable and helps the business side work effectively with the software testing teams.

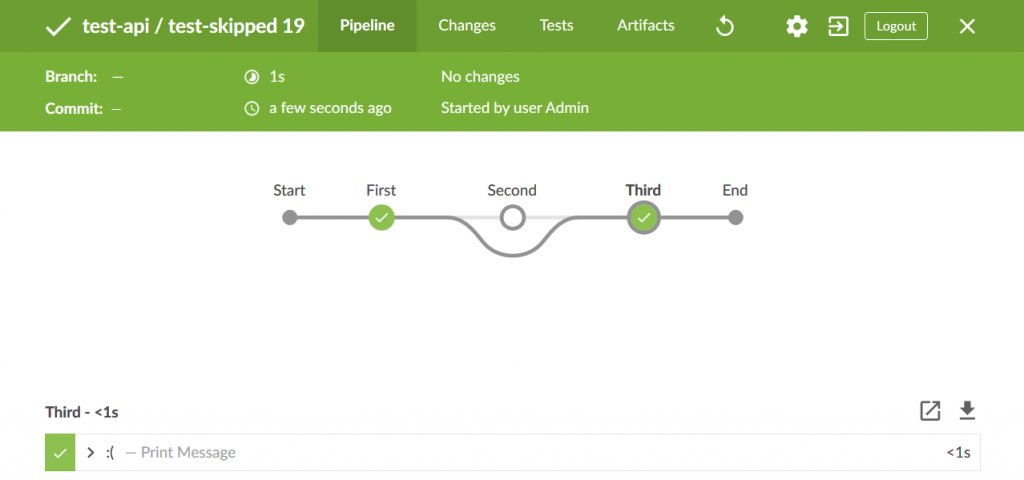

Pipeline: A pipeline is a file that defines a sequence of stages and steps. Each stage or step contains the commands that execute a specific job.

The Test Project

The process of integrating Docker and Jenkins is as follows. First, we create a Docker container that will include two projects:

- The first project is a test project: a Maven project written in Java that uses Selenium (a web browser automation framework), the Cucumber BDD framework, and pipelines written in Groovy.

- The second project is a website, an application or, more generally, a software product. The idea is for the first project (the test project) to test the second one (the software product).

In this article, we will focus only on the creation of the test project inside the Docker container.

The test project tests a website. Selenium and Java are used to build the test automation framework. Once the test cases and their steps have been prepared according to the business requirements, Cucumber and its BDD functionality come into play.

Finally, Jenkins runs the pipelines written in Groovy. There are two pipelines in this project: one for the build process, which builds the test project and collects quality metrics, and one for the test process, which tests the actual website with our testing code. In our example, the test pipeline is named “Jenkinsfile.test.groovy”.
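To make the idea of mapping plain-language steps to code more concrete, here is a toy sketch. It deliberately does not use the Cucumber API: the class `StepRegistrySketch`, its methods and any step text passed to it are hypothetical. It only illustrates the principle that a step written in human language is matched against a pattern and the code bound to that pattern is run with the captured values.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Consumer;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Toy illustration only: this is NOT the Cucumber API.
class StepRegistrySketch {

    // Maps a step pattern to the code that runs when a step matches it.
    private final Map<Pattern, Consumer<Matcher>> steps = new LinkedHashMap<>();

    // Registers a step definition: a regular expression plus an action.
    void define(String regex, Consumer<Matcher> action) {
        steps.put(Pattern.compile(regex), action);
    }

    // Runs the first definition whose pattern matches the whole step text.
    // Returns false when no definition matches.
    boolean run(String stepText) {
        for (Map.Entry<Pattern, Consumer<Matcher>> entry : steps.entrySet()) {
            Matcher matcher = entry.getKey().matcher(stepText);
            if (matcher.matches()) {
                entry.getValue().accept(matcher);
                return true;
            }
        }
        return false;
    }
}
```

In a real project, Cucumber's own step-definition annotations (such as `@Given`, `@When` and `@Then`) take the place of this registry.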

Driver Configuration

There is one vital change we need to make in the code of the automation scripts. Before creating the Chrome driver, we need to pass the following options:

options.addArguments("--headless"); // run the scripts without a browser UI

options.addArguments("start-maximized"); // open the browser maximized

options.addArguments("disable-infobars"); // disable info bars

options.addArguments("--disable-extensions"); // disable extensions

options.addArguments("--disable-gpu"); // historically needed only on Windows

options.addArguments("--disable-dev-shm-usage"); // work around limited shared memory (/dev/shm)

options.addArguments("--no-sandbox"); // bypass the OS security model

These options allow the Chrome driver to run inside the Docker environment.
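As a rough illustration, the options above could be collected in one place as sketched below. The class and method names (`DockerChromeArguments`, `forDocker`) are hypothetical and not taken from the project; only the argument strings come from the list above. The block uses plain Java so that it compiles without Selenium on the classpath, and the closing comment shows where Selenium's `ChromeOptions` would receive the list.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical helper: gathers the Chrome arguments listed above in one place.
class DockerChromeArguments {

    // Returns the arguments in the same order as in the article.
    static List<String> forDocker() {
        return Collections.unmodifiableList(Arrays.asList(
                "--headless",
                "start-maximized",
                "disable-infobars",
                "--disable-extensions",
                "--disable-gpu",
                "--disable-dev-shm-usage",
                "--no-sandbox"));
    }

    // With Selenium on the classpath, the list would be applied like this:
    //   ChromeOptions options = new ChromeOptions();
    //   options.addArguments(DockerChromeArguments.forDocker());
    //   WebDriver driver = new ChromeDriver(options);
}
```

Keeping the arguments in a single method means every place that creates a driver gets the same Docker-friendly configuration.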

Docker Configuration

In the following paragraphs we present the steps needed to create a Docker image of Jenkins. First, we create a project inside a Git repository. Then, we create a file named Dockerfile, which contains all the configuration for our Jenkins instance. It is vital to define the packages to be installed, along with the necessary tools such as the Java JDK, Chrome, ChromeDriver, etc.

For example,

RUN apt-get update && apt-get install -y openjdk-8-jdk

RUN wget https://dl.google.com/linux/direct/google-chrome-stable_current_amd64.deb

RUN wget -N http://chromedriver.storage.googleapis.com/2.39/chromedriver_linux64.zip

RUN unzip chromedriver_linux64.zip

The first of these commands installs Java JDK 8 in the image. Each line starts with the word “RUN”, a Dockerfile instruction that tells Docker to execute everything after it on the command line while the image is being built. Another useful command is the following one:

RUN wget -N http://chromedriver.storage.googleapis.com/2.39/chromedriver_linux64.zip

This command downloads ChromeDriver for Linux, which the automation scripts need in order to drive the Chrome browser. If you prefer Firefox, download geckodriver instead. A real Dockerfile will contain many more commands, and these differ from project to project.

Another important file is jobs.yaml. It contains the code that creates a project (Freestyle, etc.) or a pipeline inside the new Jenkins instance.

One more important file is plugins.txt. It lists the plugins that Jenkins needs. Here are some example entries:

checkstyle:latest // pull the latest version of checkstyle

cucumber:0.0.2 // pull a specific version

cucumber-report:3.16.0

configuration-as-code:latest

configuration-as-code-support:latest

Docker Build and Run

Now let’s see the commands we have to execute to create the container with the Jenkins instance. We do not describe the Docker installation steps, because they are clearly documented on the official Docker website (https://dockr.ly/2Qsl5FO).

After the Docker installation we need to run the following commands to create the instance:

$ docker build https://gitlab.YOUR_DOMAIN/PROJECT_PATH.git -t IMAGE_NAME

This command builds a new image named “IMAGE_NAME” by executing the Dockerfile from the given Git project. The last command, which starts Jenkins, is the following:

$ docker run -p HOST_MACHINE_PORT:DOCKER_PORT -p 50000:50000 -t IMAGE_NAME

This command runs the image named “IMAGE_NAME” and publishes the container port on which Jenkins listens (DOCKER_PORT) on the host port HOST_MACHINE_PORT. To make Jenkins available on a different host port, change the first number of the mapping; for example, -p 9090:8080 would serve a Jenkins that listens on port 8080 inside the container on port 9090 of the host. The 50000:50000 mapping allows slave agents to connect to Jenkins (omit it if you only have SSH slaves).

Docker Result

Now we can access the Jenkins instance by navigating to a link of the form IP:HOST_MACHINE_PORT (for instance http://122.406.90.99:8080). We can log in to the created instance and perform all the usual Jenkins actions, such as the following:

- Open the project that contains the pipeline, configured in the jobs.yaml file

- Enter the pipeline

- Configure and build the pipeline

- Check the Cucumber reports (plugin installed through the plugins.txt file)

Look out for the second part, which will describe the procedure for the website container in detail. Stay tuned!